
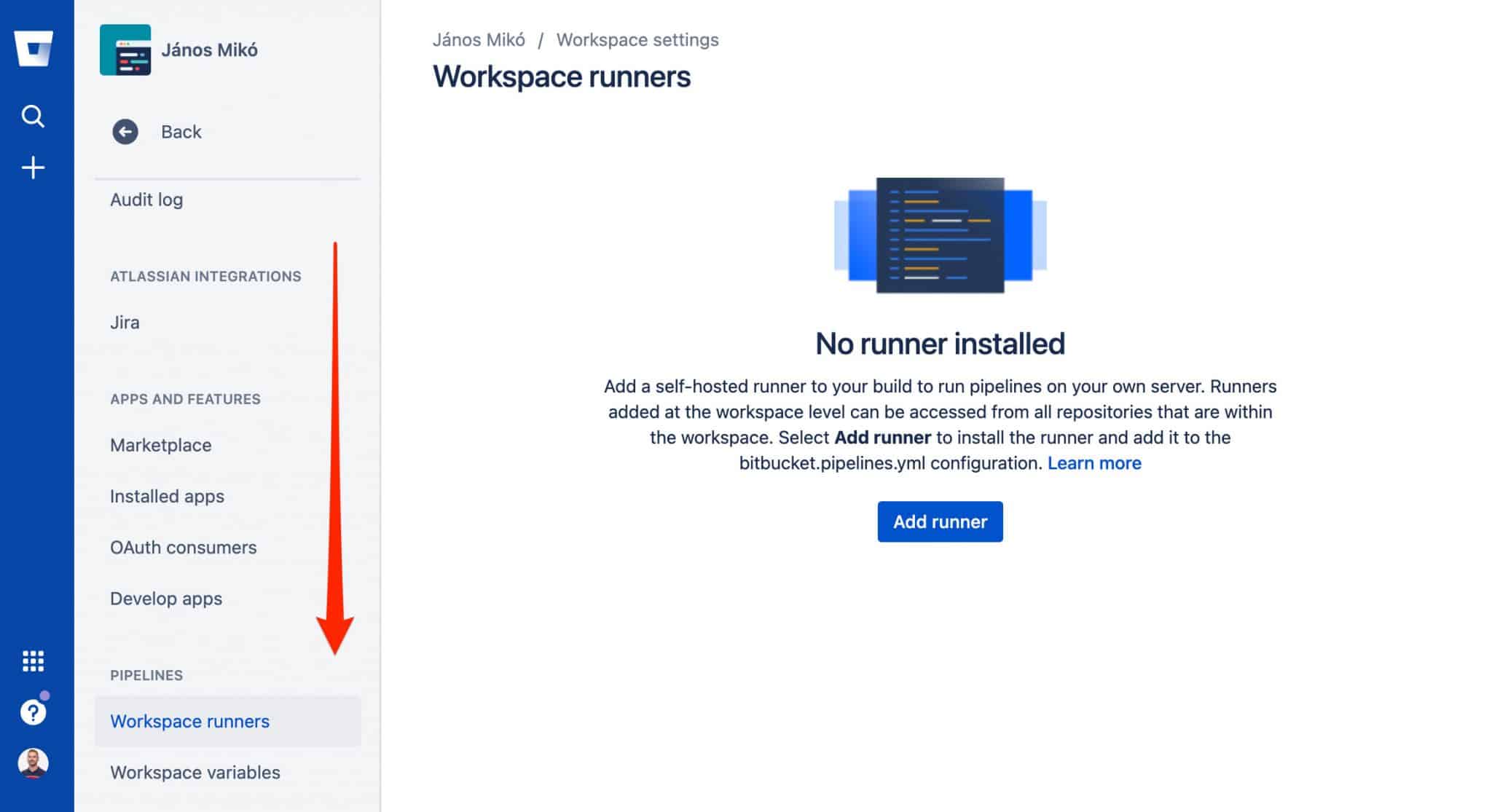

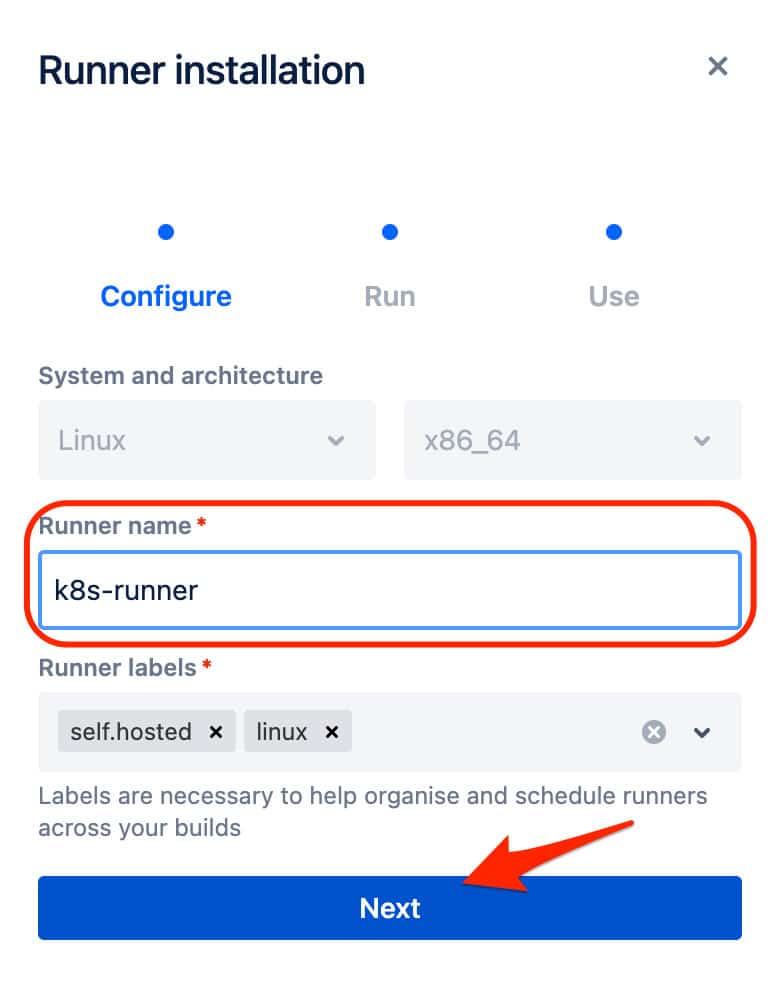

Take back control of your Continuous Integration (CI) and Continuous Deployment (CD) pipelines by running workflows on your own infrastructure. Atlassian’s self-hosted Runners for Bitbucket Pipelines give developers and DevOps professionals a way to optimize resources, meet compliance requirements and tailor configurations to their needs when deploying containerized applications or updates through a Kubernetes integration.

In this article, we’ll show you how to configure Workspace-level Runners, deploy them in Kubernetes, and troubleshoot common challenges that may arise during setup.

A Workspace Runner is a Bitbucket Pipelines feature that lets you run your CI/CD tasks on your own infrastructure rather than on Atlassian’s. It is the building block for everything that follows.

When you add a Runner to your workspace, Bitbucket generates a command like the one below, which runs the Runner as a Docker container:

    docker container run -it -v /tmp:/tmp -v /var/run/docker.sock:/var/run/docker.sock \
      -v /var/lib/docker/containers:/var/lib/docker/containers:ro \
      -e ACCOUNT_UUID={__ACCOUNT_UUID__} \
      -e RUNNER_UUID={__RUNNER_UUID__} \
      -e OAUTH_CLIENT_ID=__OAUTH_CLIENT_ID__ \
      -e OAUTH_CLIENT_SECRET=__OAUTH_CLIENT_SECRET__ \
      -e WORKING_DIRECTORY=/tmp \
      --name runner-7deb7740-f86b-50d0-9c85-671fcb3c9038 \
      docker-public.packages.atlassian.com/sox/atlassian/bitbucket-pipelines-runner:1

Now that you have the Runner command, extract four values from it for the Kubernetes deployment: ACCOUNT_UUID, RUNNER_UUID, OAUTH_CLIENT_ID and OAUTH_CLIENT_SECRET.

Copy ACCOUNT_UUID and RUNNER_UUID without the surrounding curly braces (the Job manifest adds the braces back where they are needed), and make sure the full OAUTH_CLIENT_SECRET, including special characters, is copied.

Kubernetes Secrets hold their values in Base64-encoded form so that arbitrary data can be handled and transmitted safely. In this step, you’ll Base64-encode the OAuth values extracted from the command output.
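If you prefer to script the brace handling, the following is a minimal sketch, assuming bash. The helper name `strip_braces` and the UUID are made up for illustration and are not part of any Atlassian tooling.

```shell
#!/usr/bin/env bash
# Hypothetical helper: remove the curly braces that surround the UUIDs
# in the generated docker command, leaving the bare value.
strip_braces() {
  printf '%s' "$1" | tr -d '{}'
}

# Made-up UUID, written the way it appears after "-e ACCOUNT_UUID=".
raw_account_uuid='{11111111-2222-3333-4444-555555555555}'
ACCOUNT_UUID="$(strip_braces "$raw_account_uuid")"
export ACCOUNT_UUID

echo "$ACCOUNT_UUID"   # prints 11111111-2222-3333-4444-555555555555
```

The same helper can be applied to RUNNER_UUID. The OAuth values need no such cleanup.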

Use the following script to prepare the credentials for Kubernetes deployment:

    export ACCOUNT_UUID=__ACCOUNT_UUID__
    export RUNNER_UUID=__RUNNER_UUID__
    export OAUTH_CLIENT_ID=__OAUTH_CLIENT_ID__
    # Wrap the secret in single quotes if it contains special characters
    export OAUTH_CLIENT_SECRET=__OAUTH_CLIENT_SECRET__

    # Quote the variables so special characters reach base64 unchanged
    export BASE64_OAUTH_CLIENT_ID=$(echo -n "$OAUTH_CLIENT_ID" | base64)
    export BASE64_OAUTH_CLIENT_SECRET=$(echo -n "$OAUTH_CLIENT_SECRET" | base64)

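Tip: If your echo command does not support -n, use printf '%s' "$OAUTH_CLIENT_ID" instead so that no trailing newline is encoded. With GNU base64, you can also add --wrap=0 to keep long encoded values on a single line.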
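To see why the trailing newline matters, compare the two encodings of a stand-in value. This is a small illustration only: `abc` is a placeholder, not a real credential.

```shell
#!/usr/bin/env bash
# "abc" stands in for a credential value.
with_newline="$(echo 'abc' | base64)"             # encodes "abc" plus a newline
without_newline="$(printf '%s' 'abc' | base64)"   # encodes "abc" only

echo "$with_newline"      # prints YWJjCg==
echo "$without_newline"   # prints YWJj

# Decoding shows the extra byte: 4 bytes with the newline, 3 without.
printf '%s' "$with_newline" | base64 --decode | wc -c
printf '%s' "$without_newline" | base64 --decode | wc -c
```

A credential encoded with the extra newline will not match the value Bitbucket issued, so the second form is the one to use.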

Now that you have Base64 encoded OAUTH data ready for Kubernetes, you can move on to the deployment.
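Because an unset shell variable expands to an empty string, a typo at this stage can silently produce manifests with blank values. A quick preflight check can catch that. This is a sketch assuming bash; `require_vars` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical preflight helper: report any variable that is unset or empty.
require_vars() {
  local missing=0 name
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable named by $name.
    if [ -z "${!name:-}" ]; then
      echo "missing or empty: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

if require_vars ACCOUNT_UUID RUNNER_UUID BASE64_OAUTH_CLIENT_ID BASE64_OAUTH_CLIENT_SECRET; then
  echo "all variables set"
else
  echo "fix the variables listed above before generating the manifests"
fi
```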

The ${VAR_NAME} placeholders below are filled in by the shell from the variables you exported in the previous step, so run these commands in the same terminal session to create the manifest files:

    cat > ./secret.yaml <<EOF
    apiVersion: v1
    kind: Secret
    metadata:
      name: runner-oauth-credentials
    data:
      oauthClientId: ${BASE64_OAUTH_CLIENT_ID}
      oauthClientSecret: ${BASE64_OAUTH_CLIENT_SECRET}
    EOF

    cat > ./job.yaml <<EOF
    apiVersion: batch/v1
    kind: Job
    metadata:
      name: runner
    spec:
      template:
        metadata:
          labels:
            accountUuid: ${ACCOUNT_UUID}
            runnerUuid: ${RUNNER_UUID}
        spec:
          containers:
            - name: bitbucket-k8s-runner
              image: docker-public.packages.atlassian.com/sox/atlassian/bitbucket-pipelines-runner
              env:
                - name: ACCOUNT_UUID
                  value: "{${ACCOUNT_UUID}}"
                - name: RUNNER_UUID
                  value: "{${RUNNER_UUID}}"
                - name: OAUTH_CLIENT_ID
                  valueFrom:
                    secretKeyRef:
                      name: runner-oauth-credentials
                      key: oauthClientId
                - name: OAUTH_CLIENT_SECRET
                  valueFrom:
                    secretKeyRef:
                      name: runner-oauth-credentials
                      key: oauthClientSecret
                - name: WORKING_DIRECTORY
                  value: "/tmp"
              volumeMounts:
                - name: tmp
                  mountPath: /tmp
                - name: docker-containers
                  mountPath: /var/lib/docker/containers
                  readOnly: true
                - name: var-run
                  mountPath: /var/run
            - name: docker-in-docker
              image: docker:20.10.7-dind
              securityContext:
                privileged: true
              volumeMounts:
                - name: tmp
                  mountPath: /tmp
                - name: docker-containers
                  mountPath: /var/lib/docker/containers
                - name: var-run
                  mountPath: /var/run
          restartPolicy: OnFailure
          volumes:
            - name: tmp
            - name: docker-containers
            - name: var-run
      backoffLimit: 6
      completions: 1
      parallelism: 1
    EOF

    kubectl create namespace bitbucket-runner --dry-run=client -o yaml | kubectl apply -f -
    kubectl -n bitbucket-runner apply -f secret.yaml
    kubectl -n bitbucket-runner apply -f job.yaml

Back in Bitbucket, confirm that the runner’s status is shown as ONLINE in the workspace’s Runners list.
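If the runner does not come online, the pod state and the runner container’s log are the first places to look. The sketch below assumes the namespace (bitbucket-runner), Job name (runner) and container name (bitbucket-k8s-runner) used in this article; `runner_diagnostics` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
NAMESPACE="bitbucket-runner"

# Hypothetical helper: show the runner pod and the tail of its log.
runner_diagnostics() {
  # Pods created by a Job carry the label job-name=<job name>.
  kubectl -n "$NAMESPACE" get pods -l job-name=runner
  # Last 50 log lines from the runner container of the Job's pod.
  kubectl -n "$NAMESPACE" logs job/runner -c bitbucket-k8s-runner --tail=50
}
```

Call `runner_diagnostics` after applying the manifests. A pod that is not in the Running state, or log lines about authentication, usually point back to the values prepared earlier.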

If you decide to delete the runner from Kubernetes, run the following commands:

    kubectl -n bitbucket-runner delete -f job.yaml
    kubectl -n bitbucket-runner delete -f secret.yaml
    kubectl delete namespace bitbucket-runner

Now that your Kubernetes integration is online, all that remains is to use the runner in your pipelines, on the infrastructure you prefer.

To use the runner in your pipeline, add its labels to the bitbucket-pipelines.yml file:

    pipelines:
      custom:
        pipeline:
          - step:
              name: Step1
              # default: 4gb, 2x: 8GB, 4x: 16GB, 8x: 32gb
              size: 8x
              runs-on:
                - 'self.hosted'
                - 'my.custom.label'
              script:
                - echo "This step will run on a self hosted runner with 32 GB of memory.";
          - step:
              name: Step2
              script:
                - echo "This step will run on Atlassian's infrastructure as usual.";

Be vigilant for these common issues to ensure your Workspace Runner deploys correctly.

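OOM score error (Status 500). A step may fail with an error like this:

    Status 500: {"message":"io.containerd.runc.v2: failed to adjust OOM score for shim: set shim OOM score: write /proc/PROC_ID/oom_score_adj: invalid argument\n: exit status 1: unknown"}

Check which Docker version is in use:

    # docker --version
    Docker version 20.10.7, build f0df350

Then change the docker-in-docker image in job.yaml from docker:20.10.5-dind to the matching docker:20.10.7-dind:

    - name: docker-in-docker
      image: docker:20.10.7-dind

MTU-related network issues. Pass a lower MTU to the docker-in-docker container in job.yaml:

    - name: docker-in-docker
      image: docker:20.10.7-dind
      args:
        - "--mtu=1300"

For steps that use the Docker service, set the same option in bitbucket-pipelines.yml:

    definitions:
      services:
        docker:
          image: rewardenv/docker:20.10.7-dind
          variables:
            DOCKER_OPTS: "--mtu=1300"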
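The image tag change from docker:20.10.5-dind to docker:20.10.7-dind follows the version reported by `docker --version`. As a sketch, that mapping can be derived in bash. The helper name `dind_image_for` is hypothetical, and a matching dind tag is an assumption to verify on the registry, since not every Docker release has one.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a `docker --version` line into a dind image name.
dind_image_for() {
  local version
  # Keep only the dotted version number that follows "Docker version".
  version="$(printf '%s\n' "$1" | sed -E 's/^Docker version ([0-9]+(\.[0-9]+)*).*/\1/')"
  printf 'docker:%s-dind\n' "$version"
}

dind_image_for "Docker version 20.10.7, build f0df350"   # prints docker:20.10.7-dind
```

On a machine with Docker installed, `dind_image_for "$(docker --version)"` gives the tag to try in job.yaml.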

With these fixes in place, the build should run normally.

To recap, self-hosted Runners for Bitbucket Pipelines enable you to customize your build environments and gain better control over your CI/CD workflows. The setup involves several intricate steps, including Base64 encoding, configuring Kubernetes manifests and tuning Docker network settings, but the results are a worthy return on that investment.

From resolving pipeline errors to addressing MTU-related issues, this article gives you practical solutions to get your Runners up and running smoothly. If you experience any hiccups not covered here, don’t hesitate to get in touch. The Bluprintx team will be on hand to provide advice and guidance, or a vision to transform your trajectory!